Black box AI refers to AI systems, especially those powered by complex machine learning models, where the inputs and outputs are visible but the internal decision-making logic is not.

In other words:

You know what the AI decided, but not why.

Imagine getting denied a loan, and when you ask why, the bank just says, “The machine said no.” That’s black box AI in action.

Quick Comparison:

| Term | Meaning |
|---|---|
| Black Box AI | AI systems that cannot fully explain their internal reasoning |
| White Box AI | Transparent, explainable AI models where decisions can be traced |
| Blockbox (sometimes used) | Slang or typo variation of “black box” among devs |
| BlackAI | Often used informally to refer to advanced, opaque AI models |

Why Is AI Still Opaque in 2025?

You’d think that with all our innovation, AI would be fully transparent by now. But it’s not. Here’s why black box AI persists—even in 2025:

- Deep learning models are complex. Neural nets often involve millions or even billions of parameters, and no single parameter maps to a human-readable rule. Tracing their logic? Not easy.

- Speed vs explainability. Companies prioritize fast results. Explainability often slows things down.

- Proprietary algorithms. Tech giants guard their secret sauce, even if it lacks transparency.

And perhaps most importantly…

- Lack of regulation—until now.

Real Story: Meet Blake and Blacky

In a recent viral tweet, a software engineer shared:

“Our R&D AI (nicknamed ‘Blake and Blacky’) nailed lung cancer prediction, but we couldn’t publish it—because we couldn’t explain why it worked better than doctors. No transparency = no FDA approval.”

This story sums up the dilemma faced by researchers and developers. Even when the black box works, if it’s not explainable, it’s not trusted—or even legally usable.

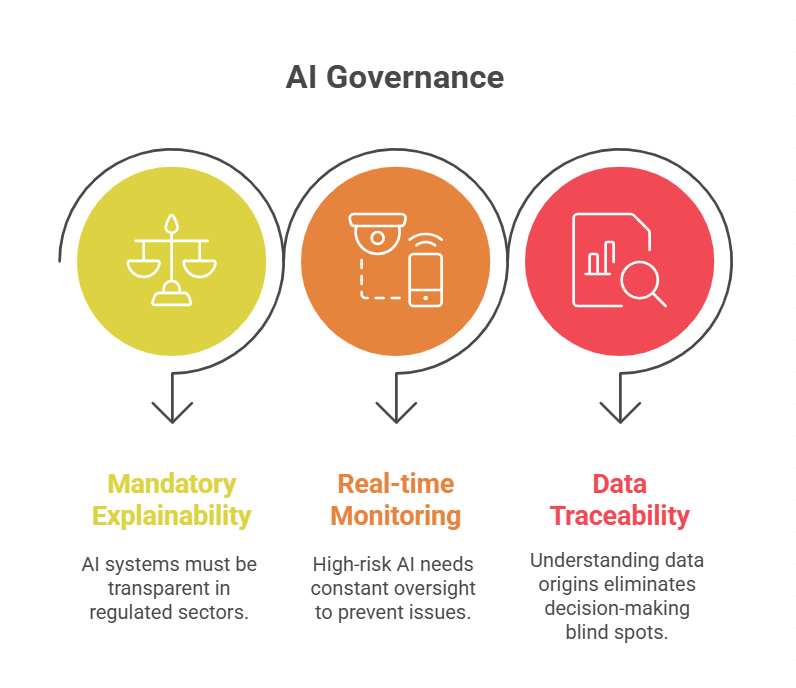

Black Box Künstliche Intelligenz: The EU’s Take

Over in Europe, regulation is catching up. The term black box künstliche Intelligenz (German for “black box artificial intelligence”) has appeared in several high-stakes policy documents, particularly within the EU AI Act and Germany’s updated KI-Sicherheitsgesetz.

Key focus areas in 2025:

- Mandatory explainability for AI in healthcare, finance, and legal industries

- Real-time monitoring for high-risk AI categories

- Data lineage and decision traceability (goodbye, blind spots)

Regulators don’t want to ban black box AI, but they do want guardrails—especially when real people are affected.

Benefits of Black Box AI (Yes, There Are Some)

Despite its cloudy logic, black box AI isn’t all bad. Sometimes, it offers huge benefits—especially when accuracy matters more than transparency.

Pros:

- High performance: Deep neural networks outperform older models.

- Pattern recognition: Finds signals and trends humans can’t see.

- Continual learning: Improves over time with more data.

- Versatility: Powers chatbots, medical diagnostics, and fraud detection, often with high accuracy.

Risks of Black Box AI in Production

But these advantages come with serious risks—especially when you’re using black box models in mission-critical workloads.

Cons:

- Accountability gaps: Who’s responsible when the AI gets it wrong?

- Bias amplification: BlackAI trained on skewed data can reinforce racism, sexism, or socioeconomic bias.

- Lack of trust: If users don’t understand results, they won’t trust or adopt the tool.

- Legal exposure: Regulators in 2025 now let citizens challenge AI decisions, even when those decisions come from a “black box” model.
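One simple way to start probing the bias risk above is to compare outcome rates across groups. The Python sketch below computes a demographic parity gap on made-up approval data. The group names, the decisions, and the function names are all hypothetical, and a large gap is a signal to investigate rather than proof of discrimination.

```python
def approval_rate(decisions):
    """Share of positive decisions (1 = approved, 0 = denied)."""
    return sum(decisions) / len(decisions)


def demographic_parity_gap(decisions_by_group):
    """Return the largest difference in approval rate between any two groups,
    along with the per-group rates."""
    rates = {group: approval_rate(d) for group, d in decisions_by_group.items()}
    gap = max(rates.values()) - min(rates.values())
    return gap, rates


# Made-up model outputs for two hypothetical groups of applicants.
decisions = {
    "group_a": [1, 1, 1, 0, 1, 1, 0, 1],
    "group_b": [1, 0, 0, 0, 1, 0, 0, 0],
}

gap, rates = demographic_parity_gap(decisions)
print(rates)  # {'group_a': 0.75, 'group_b': 0.25}
print(gap)    # 0.5
```

This check only needs the model's inputs and outputs, which is why it works even when the model itself stays a black box.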

Inside the Technology: How Black Box AI Works

Let’s get a little technical. Most black box AI systems are built on:

- Deep learning (Neural Networks, CNNs, RNNs)

- Transformer architectures (like what powers GPTs or image-to-text AIs)

- Reinforcement learning with opaque reward structures

These systems absorb huge amounts of data, then look for mathematical patterns to produce predictions. But they don’t generate “reasons” in a human-like form.

Unless you bolt on XAI (Explainable AI) tools, the logic stays hidden.
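To make that concrete, here is a minimal Python sketch of a tiny neural network. The weights are made-up numbers standing in for what training would produce, and `predict` and `count_parameters` are illustrative names, not part of any library. The point is that the output is easy to compute but the numbers inside carry no readable "reason".

```python
import math

# Hypothetical weights, as if produced by training (values invented for illustration).
W1 = [[0.8, -1.2, 0.4],
      [-0.5, 0.9, 1.1]]   # 2 inputs -> 3 hidden units
B1 = [0.1, -0.3, 0.2]
W2 = [1.5, -2.0, 0.7]     # 3 hidden units -> 1 output
B2 = -0.4


def predict(x):
    """Forward pass: ReLU hidden layer, then a sigmoid output in (0, 1)."""
    hidden = [
        max(0.0, sum(x[i] * W1[i][j] for i in range(len(x))) + B1[j])
        for j in range(len(B1))
    ]
    logit = sum(h * w for h, w in zip(hidden, W2)) + B2
    return 1.0 / (1.0 + math.exp(-logit))


def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network."""
    return sum(a * b + b for a, b in zip(layer_sizes, layer_sizes[1:]))


score = predict([1.0, 2.0])            # visible input, visible output
print(count_parameters([2, 3, 1]))     # 13 parameters in this toy network
print(count_parameters([784, 512, 512, 10]))  # 669706 for a small image classifier
```

Even this toy has 13 numbers that jointly determine the answer. A modest image classifier already has hundreds of thousands, which is where tracing the logic by hand stops being realistic.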

Can We Explain a Black Box?

Sort of. In 2025, Explainable AI (XAI) has led to progress, but it’s still a patch—not a perfect fix.

Top methods today include:

- LIME (Local Interpretable Model-Agnostic Explanations)

- SHAP values (Shapley Additive Explanations)

- Model distillation (training a simpler surrogate model to mimic the black box)

- Attention maps in transformer models

But these don’t always give users the “why” they’re craving. Sometimes they help; other times, they’re just window dressing.
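For a feel of what SHAP-style attribution does, the sketch below computes exact Shapley values for a toy scoring function by averaging each feature's marginal contribution over every feature ordering. This is not the SHAP library, which uses faster approximations. The `black_box` function, the feature names, and the baseline applicant are all assumptions made up for illustration.

```python
from itertools import permutations


def black_box(features):
    """Hypothetical opaque scoring function standing in for a trained model."""
    income, debt, years_employed = features
    score = 0.5 * income - 0.8 * debt + 0.3 * years_employed
    # An interaction term, so attributions are not just the linear weights.
    if debt > 5 and years_employed < 2:
        score -= 2.0
    return score


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution of each feature
    when switching it from the baseline value to the instance value,
    taken over all feature orderings. Cost grows factorially with features."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = model(current)
        for i in order:
            current[i] = instance[i]
            new_output = model(current)
            totals[i] += new_output - previous_output
            previous_output = new_output
    return [t / len(orderings) for t in totals]


applicant = [4.0, 7.0, 1.0]   # income, debt, years_employed (made-up units)
baseline = [5.0, 3.0, 5.0]    # a hypothetical "typical" applicant
phi = shapley_values(black_box, applicant, baseline)
for name, value in zip(["income", "debt", "years_employed"], phi):
    print(f"{name}: {value:+.2f}")
```

The attributions add up to the difference between the applicant's score and the baseline's score, so they say how much each feature moved the result. They still do not say what the model "was thinking", which is the gap the paragraph above describes.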

How Businesses Handle Blockbox Challenges Today

Leading startups and multinationals in 2025 are taking new routes:

- Audit trails. Every prediction is tracked, stored, and flagged based on confidence level.

- Hybrid models. Use white box logic for sensitive areas (e.g., eligibility) and black box for optimization (e.g., churn prediction).

- User override tools. Human-in-the-loop (HITL) systems let users review or reverse the AI’s choices.

- Internal education. Employees are trained on the limitations of black box AI and how to respond when it doesn’t “make sense.”
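The first three practices can be sketched together in a few lines of Python. Everything here is a hypothetical illustration: the eligibility rule, the stand-in `opaque_model`, the 0.75 review threshold, and the record fields are assumptions, not a description of any real company's system.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

REVIEW_THRESHOLD = 0.75  # hypothetical cutoff; tune per use case


@dataclass
class AuditRecord:
    timestamp: str
    model_version: str
    inputs: dict
    decision: str
    confidence: float
    source: str               # "rule" (white box) or "model" (black box)
    needs_human_review: bool


def is_eligible(applicant):
    """White box rule: transparent and easy to explain."""
    return applicant["age"] >= 18 and applicant["resident"]


def opaque_model(applicant):
    """Stand-in for a black box model; returns (decision, confidence)."""
    score = min(1.0, applicant["income"] / 100_000)
    return ("approve" if score >= 0.5 else "deny", max(score, 1 - score))


def decide(applicant, audit_log, model_version="demo-0.1"):
    """Hybrid decision: rule first, model second, every outcome logged."""
    if not is_eligible(applicant):
        decision, confidence, source = "deny", 1.0, "rule"
    else:
        decision, confidence = opaque_model(applicant)
        source = "model"
    record = AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        inputs=applicant,
        decision=decision,
        confidence=confidence,
        source=source,
        # Low-confidence predictions are flagged for a human to review.
        needs_human_review=confidence < REVIEW_THRESHOLD,
    )
    audit_log.append(json.dumps(asdict(record)))
    return record


audit_log = []
first = decide({"age": 30, "resident": True, "income": 60_000}, audit_log)
second = decide({"age": 16, "resident": True, "income": 0}, audit_log)
print(first.decision, first.needs_human_review)   # approve True
print(second.source, second.needs_human_review)   # rule False
```

Keeping the eligibility check as a plain rule means the most sensitive part of the decision can always be explained, while the log gives auditors and reviewers something to work from when the model's part is questioned.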

FAQs

Q. Why is it called black box AI?

A. Black box comes from engineering. It describes systems where the inputs and outputs are known, but the internal workings are not visible or understandable. In AI, it refers to models whose decisions can’t be easily explained.

Q. Is black box AI dangerous?

A. It can be, especially in high-stakes industries like healthcare, justice, or finance. If a black box system makes a mistake and no one understands why, that’s a big ethical and legal concern.

Q. How do you deal with black box AI in business?

A. By using explainability tools, maintaining audit records, and implementing oversight processes. Hybrid systems combining transparent rules with powerful AI are increasingly popular in 2025.

Q. Can black box AI be explainable?

A. Yes, to an extent. Tools like LIME, SHAP, and model distillation help dissect AI behavior. But full explainability is still an active research problem, especially for large-scale generative models.

Conclusion

Black box AI is powering some of the most promising technologies in the world: self-driving cars, disease detection, climate modeling, creative assistants. It’s not going away. But we can demand better oversight, transparency, and responsible development. In 2025, it’s no longer enough to marvel at what AI can do. We need to understand how and why it makes those calls, or at least hold builders accountable for explaining them.

